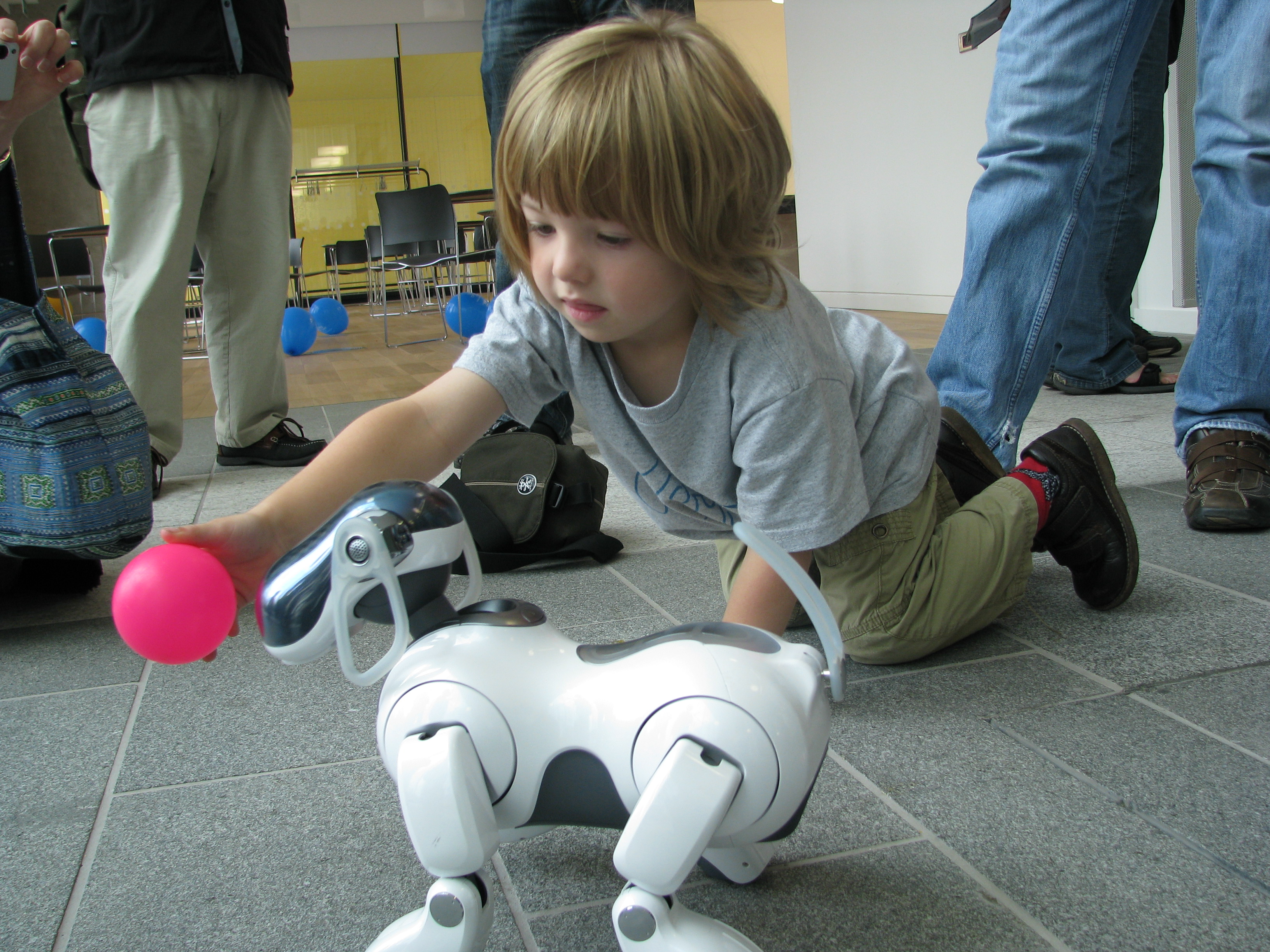

Imagine a young lady in a crowd, being followed with an intentional, focused stare as she goes about her day, unaware of what thoughts are going on in the head of her pursuer, a social outcast with poor social skills and an unnatural demeanour. Now imagine that this character is a robot and that its eyes are the surveillance system around the woman.

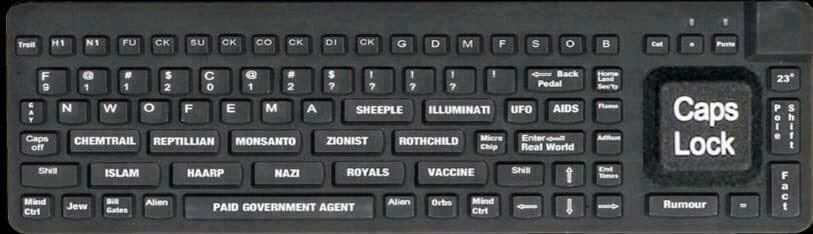

The TV program A Current Affair revealed the inevitable and unsurprising story that at one particular shopping centre, security guards were perving on women by watching what the surveillance cameras were seeing, sometimes even saving the images. The outrage was one side of the story, but there was an elephant in the room − how is this any different to the security guard walking out of his office (“he” because I’m presuming the security guard fits the Hollywood cliché of being a bureaucratic, lazy, heterosexual man) and following the woman himself? A Current Affair even showed an incident of this happening, but left it at that without discussing the implications.

Paul Blart, Mall Cop − a typical security guard? © Columbia Pictures

Society accepts the inevitability that attractive women walking through a public place are going to catch the eyes of heterosexual men. In Western society, this view and its associated appreciation are deemed acceptable, as long as it stops there. Men have moral bounds imposed on any potential escalation, as well as laws involving rape, stalking and photographing. Islamic countries also place bounds on this scenario, with participation from the women.

Digital pictures have long been ignored by the law, and even by society’s demands for new laws. This is perhaps because the lack of physical presence prevents many of the potential bad scenarios. There is less intimidation from a creepy old man flashing his penis online than in a park. Ideally though, these inconsistencies should not be accepted by society. A pervert flashing his penis or stalking a woman via cameras should be treated no differently to a pervert doing it in meatspace.

The terrible state of society’s perception of cameras is largely because people are not aware of what’s possible. The state of the art is not just the recognition of faces, but the 3D reconstruction of visible objects and the recognition of meaningful activities, like people falling over, beer being drunk or bags being left unattended. The footage of you is useful to your robotic personal assistants. By being able to watch you, robotic assistants could measure how much alcohol you’ve drunk; they could track your mood throughout the day; or they could command doors to open for you as you approach.

Imperfect, corruptible humans are not actually all that necessary for overseeing security cameras.

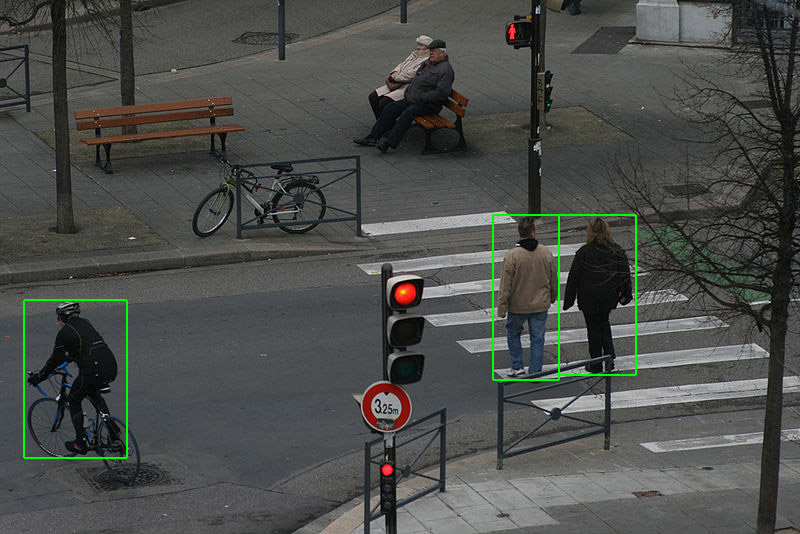

Computer vision can handle most tasks of a surveillance system and can alter the images appropriately if humans become necessary. Cameras watching the eyes of the guards could determine where they’re looking, give them a glance at a person, then blur that person out. The glance only needs to be long enough to get an impression of the person and perhaps to recognise them from earlier in the guard’s life. If the guards need a second look, brain scanners could detect that they want one and could detect whether the reason is simply to ogle. The event could also be logged.

You should realistically have easy access to all images and video of yourself. In fact, I would argue that all people in your user group should have access to images and video of you. Regardless of whether it is a human or robotic eye, parents should for instance be able to see their children in a hallway; diners should be able to see other diners in the restaurant at the same time as them; people in a public square should be visible to police and citizens; and you should be able to see yourself in the mirror. This is arguably what society has already deemed socially acceptable when applied to phone footage: there are popular paradigms like “stop filming and following me”, “what happens in Vegas stays in Vegas” and “we’re allowed to film police officers”. Not all cameras, but certainly all surveillance systems, should be forced to make their footage available with great ease to anyone with permission to see it.

The easy access to images by those entitled to see them would not just enhance the ability of robotic assistants, but would assist those who already eventually get access to the images. In the NSW Coroner’s report on the death of Roberto Laudisio Curti for instance, Jeremy Gormly submitted, "it is hard to avoid commenting on the enormous value to this investigation of the various electronic recording systems, particularly CCTV, throughout the city, installed both by the City of Sydney Council and by a very large number of businesses. The cameras, despite at times showing only fleeting images, enabled Roberto’s route and the timing of his movements to be determined. The time saved has been remarkable, but the greatest benefit was to be able to establish precisely places, times and actions which might otherwise have remained speculative."

Roberto Curti being chased by police.

The government has been made responsible for managing stalkers, so it is the one with the obligation to act here. The government is grossly failing to take advantage of progress in computer science. With regard to surveillance, the government is the logical middleman for managing permitted user groups and granting access to surveillance footage. In the restaurant example for instance, the restaurant could detect the MAC address of a diner’s phone and submit that to the government, along with the surveillance footage. The diner, their robotic assistants and others in the diner’s permitted user group could then access a version of the footage, for example one with everyone else’s identity blurred.
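To make the idea concrete, here is a minimal sketch in Python of how such a middleman might decide who is shown clearly and who is blurred. Everything in it is an assumption made for illustration: the names `Detection`, `FootageRegistry`, `permit` and `view_for` are hypothetical, people are identified by plain strings (which could stand in for something like a phone's MAC address), and the actual blurring of pixels is left out entirely.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Detection:
    """One person visible in a frame (hypothetical structure)."""
    subject_id: str        # assumed identifier, e.g. derived from a phone's MAC address
    blurred: bool = False  # whether this person's identity is hidden from the viewer


@dataclass
class FootageRegistry:
    """Hypothetical middleman mapping each subject to their permitted viewers."""
    groups: dict[str, set[str]] = field(default_factory=dict)

    def permit(self, subject_id: str, viewer_id: str) -> None:
        """Add viewer_id to the user group allowed to see subject_id."""
        self.groups.setdefault(subject_id, set()).add(viewer_id)

    def may_view(self, viewer_id: str, subject_id: str) -> bool:
        """A subject may always see themselves; others need to be in the group."""
        return viewer_id == subject_id or viewer_id in self.groups.get(subject_id, set())

    def view_for(self, viewer_id: str, frame: list[Detection]) -> list[Detection]:
        """Return the viewer's version of a frame, or nothing if they have no stake in it."""
        if not any(self.may_view(viewer_id, d.subject_id) for d in frame):
            return []  # viewer is not entitled to see anyone in this frame
        return [
            Detection(d.subject_id, blurred=not self.may_view(viewer_id, d.subject_id))
            for d in frame
        ]


if __name__ == "__main__":
    registry = FootageRegistry()
    registry.permit("diner", "diner_assistant")
    frame = [Detection("diner"), Detection("stranger")]
    for detection in registry.view_for("diner_assistant", frame):
        print(detection)
```

In this sketch the diner's assistant sees the diner clearly and the stranger blurred, while someone with no connection to anyone in the frame gets nothing at all. A real system would also need to deal with identity verification, time windows and auditing, none of which is attempted here.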

Imagine if the Lindt café had been using such an access control system for its surveillance footage − the police could’ve seen all the footage live and quickly determined that there was no second gunman and that there probably wasn’t a bomb in his bag.

Some would be uneasy about increasing people’s rights to digital images, especially on the basis of perversion. It must be remembered though that we can’t stop people with a photographic memory from drawing a picture of what they saw. Nor can we stop someone with a bionic eye from saving a clear image of exactly what they saw. Mozart for instance transcribed the pope’s secret song after hearing it. Enabling greater access to imagery would significantly enhance the abilities of our robotic assistants (or enhance the machine portion of our cyborg selves, depending on one’s philosophical point of view).